Linguistic strategies for improving informed consent in clinical trials among low health literacy patients

Evidence-based guidance on how to improve informed consent processes for patients being invited to participate in clinical research.

| 0 Comments | Evaluated

Informed Health Choices Podcasts

Each episode includes a short story with an example of a treatment claim and a simple explanation of a Key Concept used to assess that claim.

| 1 Comment | Evaluated

Informed Health Choices Primary School Resources

A textbook and a teachers’ guide for 10 to 12-year-olds. The textbook includes a comic, exercises and classroom activities.

| 0 Comments | Evaluated

Ebm@school – a curriculum of critical health literacy for secondary school students

A curriculum based on the concept of evidence-based medicine, which consists of six modules.

| 0 Comments | Evaluated

Confidence Intervals – CASP

The p-value gives no direct indication of how large or important the estimated effect size is. So, confidence intervals are often preferred.

| 0 Comments | Evaluated

Know Your Chances

This book has been shown in two randomized trials to improve people's understanding of risk in the context of health care choices.

| 0 Comments | Evaluated

Philosophy for Children (P4C)

P4C promotes high-quality classroom dialogue in response to children’s own questions about shared stories, films and other stimuli.

| 0 Comments | Evaluated

Thinking, talking, doing science

An experimental educational intervention in teaching science at primary schools.

| 0 Comments | Evaluated

Evidence for everyday health choices

A 17-min slide cast by Lynda Ware, on the history of EBM, what Cochrane is, and how to understand the real evidence behind the headlines.

| 0 Comments

Sunn Skepsis

This portal is intended to give you, as a patient, advice on quality criteria for health information and access to research-based information.

| 0 Comments

Dancing statistics: Explaining variance

A 5-minute film demonstrating the statistical concept of variance through dance.

| 0 Comments

Dancing statistics: sampling & standard error

A 5-minute film demonstrating the statistical concept of sampling and standard error through dance.

| 0 Comments

Don’t jump to conclusions, #Ask for Evidence

An introduction to the ‘Ask for Evidence’ initiative launched by ‘Sense about Science’ in 2016.

| 0 Comments

How can you know if the spoon works?

Short, small group exercise on how to design a fair comparison using the "claim" that a spoon helps retain the bubbles in champagne.

| 0 Comments

English National Curriculum vs Key Concepts – Key Stage 3

A linked spreadsheet showing how the Key Concepts map to the Science National Curriculum in England at Key Stage 3 (ages 11-14).

| 0 Comments

Calling Bullshit Syllabus

Carl Bergstrom's and Jevin West's nice syllabus for 'Calling Bullshit'.

| 0 Comments

Tom Hanks and Type 2 Diabetes

A 50-minute illustrated talk by James McCormack prompted by Tom Hanks’ announcement that he had been diagnosed with Type 2 diabetes.

| 0 Comments

Tricks to help you get the result you want from your study (S4BE)

Inspired by a chapter in Ben Goldacre’s ‘Bad Science’, medical student Sam Marks shows you how to fiddle research results.

| 0 Comments

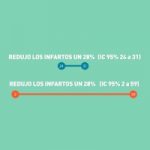

Reporting the findings: Absolute vs relative risk

Absolute Differences between the effects of two treatments matter more to most people than Relative Differences.

| 0 Comments

It’s just a phase

A resource explaining how the phases of clinical trials differ.

| 0 Comments

Strictly Cochrane: a quickstep around research and systematic reviews

An interactive resource explaining how systematic and non-systematic reviews differ, and the importance of keeping reviews up to date.

| 0 Comments

The Princess and the p-value

An interactive resource introducing reporting and interpretation of statistics in controlled trials.

| 0 Comments

Teach Yourself Cochrane

Tells the story behind Cochrane and the challenges of finding good-quality evidence to produce reliable systematic reviews.

| 3 Comments

Explaining the mission of the AllTrials Campaign (TED talk)

Half the clinical trials of medicines we use haven’t been published. Síle Lane shows how the AllTrials Campaign is addressing this scandal.

| 0 Comments

Building evidence into education

Ben Goldacre explains why appropriate infrastructure is needed to do clinical trials of sufficient rigour and size to yield reliable results.

| 0 Comments

Dodgy academic PR

Ben Goldacre: 58% of all press releases by academic institutions lacked relevant cautions and caveats about the methods and results reported.

| 0 Comments

Over there! An 8 mile high distraction made of posh chocolate!

Ben Goldacre illustrates strategies used by vested interests to discredit research with ‘inconvenient’ results.

| 0 Comments

Brain imaging studies report more positive findings than their numbers can support. This is fishy.

Ben Goldacre explores how brain imaging studies may have come to report twice as many positive findings as could realistically have been expected from the data.

| 0 Comments

What if academics were as dumb as quacks with statistics?

Ben Goldacre introduces a statistical error that appears in about half of all the published papers in academic neuroscience research.

| 0 Comments

The strange case of the magnetic wine

Ben Goldacre shows how claims for the wine-maturing effects of magnets could be assessed with 50 people in an evening.

| 0 Comments

Sampling error, the unspoken issue behind small number changes in the news

Ben Goldacre stresses the importance of taking account of “sampling variability” and confidence intervals.

| 0 Comments

The certainty of chance

Ben Goldacre reminds readers how associations may simply reflect the play of chance, and describes Deming’s illustration of this.

| 0 Comments

How myths are made

Ben Goldacre draws attention to Steven Greenberg’s forensically based illustration of citation biases.

| 0 Comments

Cherry picking is bad. At least warn us when you do it.

Ben Goldacre illustrates how ‘cherry picking’, choosing selectively from the relevant evidence, can result in unreliable conclusions.

| 0 Comments

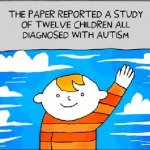

The Man Who Swallowed The Pea, and Other Tall Tales

Tamara Ingamells’ lesson plan using the claim that MMR vaccination causes autism to help teenagers understand the importance of biases.

| 0 Comments

Dragon Lesson Plan to investigate multivariate categorical data

Investigating multivariate data by sorting and organising a set of dragon cards to uncover information about the set.

| 0 Comments

Fast Stats to explain absolute risk, relative risk and Number Needed to Treat (NNT)

A 15-slide presentation on ‘Fast Stats’ to explain absolute risk, relative risk and Number Needed to Treat (NNT) prepared by PharmedOut.

| 0 Comments

Unsubstantiated and overstated claims of efficacy

A 32-slide presentation on misleading advertisements and FDA warnings prepared by PharmedOut.

| 0 Comments

Critical appraisal

University of New South Wales Medical Statistics Tutorial 4 addresses Critical Appraisal.

| 0 Comments

Probability and tests of statistical significance

University of New South Wales Medical Statistics Tutorial 6 addresses ‘Probability and tests of statistical significance’.

| 0 Comments

Bias – the biggest enemy

University of New South Wales Medical Stats Online Tutorial 5 addresses ‘Bias - the biggest enemy’.

| 0 Comments

Generation R – Pictionary research activity

GenerationR’s version of Pictionary using research concepts instead of usual game cards, allocated in different levels of difficulty.

| 0 Comments

Generation R – Clinical trials card-sorting exercise

Card-sorting exercise developed by GenerationR to familiarise children and young people with jargon terms used by clinical researchers.

| 0 Comments

Clinical Research Workshop

‘Clinical Research Workshop’ developed for young people by the Centre of the Cell.

| 0 Comments

Introduction to Evidence-Based Medicine

Bill Caley’s 26 slides with notes used as an ‘Introduction to Evidence-Based Medicine’.

| 0 Comments

2×2 tables and relative risk

A 10-min talk on ‘2x2 tables and Relative Risk’, illustrated by 14 slides, with notes.

| 0 Comments

Appraisal of evidence and interpretation of results

A 14-min talk on ‘Appraisal of the Evidence and Interpretation of the Results’, illustrated by 19 slides, with notes.

| 0 Comments

Basic principles of randomised trials, and validity

An 8-min talk on ‘Basic principles of Randomised Trials, and Validity’, illustrated by 15 slides, with notes.

| 0 Comments

Defining clinical questions

An 8-min talk on ‘Defining Clinical Questions’ illustrated by 10 slides, with notes.

| 0 Comments

A way to teach about systematic reviews

81 slides used by David Nunan (Centre for Evidence-Based Medicine, Oxford) to present ‘A way to teach about systematic reviews’.

| 0 Comments

Applying the evidence

Six key slides produced by the University of Western Australia on applying evidence in practice.

| 0 Comments

Appraising the evidence

Six key slides produced by the University of Western Australia to introduce critical appraisal.

| 0 Comments

The power of the placebo effect

Emma Bryce’s video presents information about placebo effects: treatments not supposed to have an effect but which make people feel better.

| 0 Comments

Detectives in the classroom

Five modules of materials for promoting epidemiology among high school students.

| 0 Comments

Not all scientific studies are created equally

David Schwartz dissects two types of studies that scientists use, illuminating why you should always approach claims with a critical eye.

| 1 Comment

Taking account of the play of chance

Differences in outcome events in treatment comparisons may reflect only the play of chance. Increasing the number of events reduces this problem.

| 0 Comments

Quantifying uncertainty in treatment comparisons

Small studies in which few outcome events occur are usually not informative and the results are sometimes seriously misleading.

| 0 Comments

Bringing it all together for the benefit of patients and the public

Improving reports of research and up-to-date systematic reviews of reliable studies are essential foundations of effective health care.

| 0 Comments

Applying the results of trials and systematic reviews to individual patients

Paul Glasziou uses 28 slides to address ‘Applying the results of trials and systematic reviews to individual patients’.

| 0 Comments

10 Components of effective clinical epidemiology: How to get started

PDF & Podcast of 1-hr talk by Carl Heneghan (Centre for Evidence-Based Medicine, Oxford) on effective clinical epidemiology.

| 0 Comments

Making the most of the evidence in education

A pamphlet to guide people using research evidence when deliberating about educational policies.

| 0 Comments

Critical appraisal of clinical trials

Slides developed by Amanda Burls for an interactive presentation covering the most important features of well controlled trials.

| 0 Comments

Caffeine Soft Drinks affect Human Heart Rate. Lesson Plan

A lesson to illustrate how medical researchers study the effects of drugs on people.

| 0 Comments

Life saving maths: How does vaccination work?

Vaccinating a large enough proportion of children means everyone is protected, including those who can't be vaccinated.

| 0 Comments

How to work out whether bacon sandwiches are harmful

The headline said there is a 20% greater risk of getting bowel cancer if you eat bacon sandwiches! Is it right?

| 0 Comments

Explaining the unbiased creation of treatment comparison groups and blinded outcome assessment

A class were given coloured sweets and asked to design an experiment to find out whether red sweets helped children to think more quickly.

| 0 Comments

Investigating how to remove bacteria from hands

Investigate the best way to remove bacteria from your hands.

| 0 Comments

Tips for understanding Intention-to-Treat analysis

Ignoring non-compliance with assigned treatments leads to biased estimates of treatment effects. ITT analysis reduces these biases.

| 0 Comments

Tips for understanding Absolute vs. Relative Risk

Absolute Differences between the effects of two treatments matter more to most people than Relative Differences.

| 0 Comments

Applying Systematic Reviews

How useful are the results of trials in a systematic review when it comes to weighing up treatment choices for particular patients?

| 0 Comments

Systematic Reviews and Meta-analysis: Information Overload

None of us can keep up with the sheer volume of material published in medical journals each week.

| 0 Comments

Combining the Results from Clinical Trials

Chris Cates notes that emphasizing the results of patients in particular sub-groups in a trial can be misleading.

| 0 Comments

How Science Works

Definitions of terms that students have to know for 'How Science Works' and associated coursework, ISAs, etc.

| 0 Comments

GenerationR – The importance of involving children and young people in research

3/3, 22-min video at the launch of GenerationR, a network of young people who advise researchers.

| 0 Comments

Generation R – The importance of medical research in children and young people

2/3, 35-min video at the launch of GenerationR, a network of young people who advise researchers.

| 0 Comments

Generation R – The need to reduce waste in clinical research involving children

1/3, 14-min video at the launch of GenerationR, a network of young people who advise researchers.

| 0 Comments

No Power, No Evidence!

This blog explains that studies need sufficient statistical power to detect a difference between groups being compared.

| 0 Comments

Beginners guide to interpreting odds ratios, confidence intervals and p values

A tutorial on interpreting odds ratios, confidence intervals and p-values, with questions to test the reader’s knowledge of each concept.

| 0 Comments

Sample Size matters even more than you think

This blog explains why adequate sample sizes are important, and discusses research showing that sample size may affect effect size.

| 0 Comments

What is it with Odds and Risk?

This blog explains odds ratios and relative risks, and provides the formulae for calculating both measures.

| 0 Comments

The Systematic Review

This blog explains what a systematic review is, the steps involved in carrying one out, and how the review should be structured.

| 0 Comments

The Mean: Simply Average?

This blog explains ‘the mean’ as a measure of average; describes how to calculate it; and flags up some caveats.

| 0 Comments

Publication Bias: An Editorial Problem?

A blog challenging the idea that publication bias mainly occurs at editorial level, after research has been submitted for publication.

| 0 Comments

The Bias of Language

Publication of research findings in a particular language may be prompted by the nature and direction of the results.

| 0 Comments

Defining Bias

This blog explains what is meant by ‘bias’ in research, focusing particularly on attrition bias and detection bias.

| 0 Comments

Data Analysis Methods

A discussion of two approaches to data analysis in trials, ‘As Treated’ and ‘Intention-to-Treat’, and some of the pros and cons of each.

| 0 Comments

Defining Risk

This blog defines ‘risk’ in relation to health, and discusses some of the difficulties in applying estimates of risk to a given individual.

| 0 Comments

Traditional Reviews vs. Systematic Reviews

This blog outlines 11 differences between systematic and traditional reviews, and why systematic reviews are preferable.

| 0 Comments

P Value in Plain English

Using simple terms and examples, this blog explains what p-values mean in the context of testing hypotheses in research.

| 0 Comments

Making sense of randomized trials

A description of how clinical trials are constructed and analysed to ensure they provide fair comparisons of treatments.

| 0 Comments

Randomized Control Trials

1/2, 40-min lecture on randomized trials by Dr R Ramakrishnan (Lecture 25) for the Central Coordinated Bioethics Programme in India.

| 0 Comments

Compliance with protocol and follow-up in clinical trials

Denis Black’s 10-min, downloadable, PowerPoint presentation on compliance, follow-up, and intention-to-treat analysis in clinical trials.

| 0 Comments

Clinical Significance – CASP

To understand results of a trial it is important to understand the question it was asking.

| 0 Comments

Statistical Significance – CASP

In a well-conducted randomized trial, the groups being compared should differ from each other only by chance and by the treatment received.

| 0 Comments

P Values – CASP

Statistical significance is usually assessed by appeal to a p-value, a probability, which can take any value between 0 (impossible) and 1 (certain).

| 0 Comments

Making sense of results – CASP

This module introduces the key concepts required to make sense of statistical information presented in research papers.

| 0 Comments

Randomised Control Trials – CASP

This module looks at the critical appraisal of randomised trials.

| 0 Comments

Common Sources of Bias

Bias (the conscious or unconscious influencing of a study and its results) can occur in different ways and renders studies less dependable.

| 0 Comments

Tamiflu: securing access to medical research data

A campaign by researchers has shown that Roche spun the research on Tamiflu to meet their commercial ends.

| 0 Comments

MMR: the facts in the case of Dr Andrew Wakefield

This 15-page cartoon explains the events surrounding the MMR controversy, and provides links to the relevant evidence.

| 5 Comments

Los intervalos de confianza en investigación

What are confidence intervals used for in research studies?

| 0 Comments

The need to compare like-with-like in treatment comparisons

Allocation bias results when trials fail to ensure that, apart from the treatments being compared, ‘like will be compared with like’.

| 0 Comments

Why avoiding differences between treatments allocated and treatments received is important

Knowledge of which treatments have been received by which study participants can affect adherence to assigned treatments and result in bias.

| 0 Comments

The need to avoid differences in the way treatment outcomes are assessed

Biased treatment outcome assessment can result if people know which participants have received which treatments.

| 0 Comments

Avoiding biased selection from the available evidence

Systematic reviews are used to identify, evaluate and summarize all the evidence relevant to addressing a particular question.

| 0 Comments

Preparing and maintaining systematic reviews of all the relevant evidence

Unbiased, up-to-date systematic reviews of all the relevant, reliable evidence are needed to inform practice and policy.

| 0 Comments

Dealing with biased reporting of the available evidence

Biased reporting of research occurs when the direction or statistical significance of results influences how research is reported.

| 0 Comments

Using the results of up-to-date systematic reviews of research

Trustworthy evidence from research is necessary, but not sufficient, to improve the quality of health care.

| 0 Comments

Introduction to JLL Explanatory Essays

Professionals sometimes harm patients by using inadequately evaluated treatments. Research addressing uncertainties can reduce this harm.

| 0 Comments

Avoiding biased treatment comparisons

Biases in tests of treatments are those factors that can lead to conclusions that are systematically different from the truth.

| 0 Comments

Bias introduced after looking at study results

Biases can be introduced when knowledge of the results of studies influences analysis and reporting decisions.

| 0 Comments

Why comparisons must address genuine uncertainties

Too much research is done when there are no genuine uncertainties about treatment effects. This is unethical, unscientific, and wasteful.

| 0 Comments

Interpreting 95% Confidence Intervals

Gilbert Welch’s 9-min video on how 95% confidence intervals relate to p values.

| 0 Comments

Why treatment comparisons are essential

Formal comparisons are required to assess treatment effects and to take account of the natural course of health problems.

| 0 Comments

Why treatment uncertainties should be addressed

Ignoring uncertainties about the effects of treatments has led to avoidable suffering and deaths.

| 0 Comments

‘Ask for Evidence’ lesson plan

A lesson plan and resources to give 13- to 16-year-olds the opportunity to explore whether what they see, read, and hear is true.

| 0 Comments

What are systematic reviews?

A 3-min video by Jack Nunn and The Cochrane Consumers and Communication group for people unfamiliar with the concept of systematic reviews.

| 0 Comments

Interactive PowerPoint Presentation about Clinical Trials

An interactive PowerPoint presentation for people thinking about participating in a clinical trial or interested in learning about them.

| 0 Comments