Confidence Intervals – CASP

The p-value gives no direct indication of how large or important the estimated effect size is. So, confidence intervals are often preferred.

| 0 Comments | Evaluated

Know Your Chances

This book has been shown in two randomized trials to improve people's understanding of risk in the context of health care choices.

| 0 Comments | Evaluated

How can you know if the spoon works?

Short, small group exercise on how to design a fair comparison using the "claim" that a spoon helps retain the bubbles in champagne.

| 0 Comments

DRUG TOO

James McCormick with another parody/spoof of the Cee Lo Green song ‘Forget You’ to prompt scepticism about many drug treatments.

| 0 Comments

Calling Bullshit Syllabus

Carl Bergstrom's and Jevin West's nice syllabus for 'Calling Bullshit'.

| 0 Comments

Tricks to help you get the result you want from your study (S4BE)

Inspired by a chapter in Ben Goldacre’s ‘Bad Science’, medical student Sam Marks shows you how to fiddle research results.

| 0 Comments

It’s just a phase

A resource explaining the differences between different trial phases.

| 0 Comments

Strictly Cochrane: a quickstep around research and systematic reviews

An interactive resource explaining how systematic and non-systematic reviews differ, and the importance of keeping reviews up to date.

| 0 Comments

The Princess and the p-value

An interactive resource introducing reporting and interpretation of statistics in controlled trials.

| 0 Comments

Teach Yourself Cochrane

Tells the story behind Cochrane and the challenges of finding good-quality evidence to produce reliable systematic reviews.

| 3 Comments

Explaining the mission of the AllTrials Campaign (TED talk)

Half the clinical trials of medicines we use haven’t been published. Síle Lane shows how the AllTrials Campaign is addressing this scandal.

| 0 Comments

Fast Stats to explain absolute risk, relative risk and Number Needed to Treat (NNT).

A 15-slide presentation on ‘Fast Stats’ to explain absolute risk, relative risk and Number Needed to Treat (NNT) prepared by PharmedOut.

| 0 Comments
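As a rough illustration of the three measures named in the entry above, the following sketch computes absolute risk reduction, relative risk and NNT from event counts in two groups. The function name and the trial numbers are hypothetical and are not taken from the PharmedOut slides.

```python
def risk_measures(events_treated, n_treated, events_control, n_control):
    """Return (absolute risk reduction, relative risk, NNT) for an unwanted outcome.

    Assumes the control group had at least one event, so the relative risk is defined.
    """
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    arr = risk_control - risk_treated   # absolute risk reduction
    rr = risk_treated / risk_control    # relative risk
    # NNT is the reciprocal of the absolute risk reduction;
    # it is undefined (reported here as infinity) when the risks are equal.
    nnt = 1 / arr if arr != 0 else float("inf")
    return arr, rr, nnt


# Hypothetical trial: 10/1000 events with treatment, 20/1000 with control.
arr, rr, nnt = risk_measures(10, 1000, 20, 1000)
print(f"ARR = {arr:.3f}, RR = {rr:.2f}, NNT = {nnt:.0f}")
```

In this made-up example the relative risk is halved, yet about 100 people would need to be treated to prevent one event, which is why relative and absolute measures are best reported together.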

Unsubstantiated and overstated claims of efficacy

A 32-slide presentation on misleading advertisements and FDA warnings prepared by PharmedOut.

| 0 Comments

Critical appraisal

University of New South Wales Medical Statistics Tutorial 4 addresses Critical Appraisal.

| 0 Comments

Probability and tests of statistical significance

University of New South Wales Medical Statistics Tutorial 6 addresses ‘Probability and tests of statistical significance’.

| 0 Comments

Bias – the biggest enemy

University of New South Wales Medical Stats Online Tutorial 5 addresses ‘Bias - the biggest enemy’.

| 0 Comments

Introduction to Evidence-Based Medicine

Bill Caley’s 26 slides with notes used as an ‘Introduction to Evidence-Based Medicine’.

| 0 Comments

Applying evidence to patients

A 27-minute talk on ‘Applying Evidence to Patients’, illustrated by 17 slides, with notes.

| 0 Comments

2×2 tables and relative risk

A 10-min talk on ‘2x2 tables and Relative Risk’, illustrated by 14 slides, with notes.

| 0 Comments

Appraisal of evidence and interpretation of results

A 14-min talk on ‘Appraisal of the Evidence and Interpretation of the Results’, illustrated by 19 slides, with notes.

| 0 Comments

Basic principles of randomised trials, and validity

An 8-min talk on ‘Basic principles of Randomised Trials, and Validity’, illustrated by 15 slides, with notes.

| 0 Comments

Defining clinical questions

An 8-min talk on ‘Defining Clinical Questions’ illustrated by 10 slides, with notes.

| 0 Comments

A way to teach about systematic reviews

81 slides used by David Nunan (Centre for Evidence-Based Medicine, Oxford) to present ‘A way to teach about systematic reviews’.

| 0 Comments

Applying the evidence

Six key slides produced by the University of Western Australia on applying evidence in practice.

| 0 Comments

Appraising the evidence

Six key slides produced by the University of Western Australia to introduce critical appraisal.

| 0 Comments

Taking account of the play of chance

Differences in outcome events in treatment comparisons may reflect only the play of chance. Increasing the number of events reduces this problem.

| 0 Comments

Quantifying uncertainty in treatment comparisons

Small studies in which few outcome events occur are usually not informative and the results are sometimes seriously misleading.

| 0 Comments

Bringing it all together for the benefit of patients and the public

Improving reports of research and up-to-date systematic reviews of reliable studies are essential foundations of effective health care.

| 0 Comments

Applying the results of trials and systematic reviews to individual patients

Paul Glasziou uses 28 slides to address ‘Applying the results of trials and systematic reviews to individual patients’.

| 0 Comments

10 Components of effective clinical epidemiology: How to get started

PDF & Podcast of 1-hr talk by Carl Heneghan (Centre for Evidence-Based Medicine, Oxford) on effective clinical epidemiology.

| 0 Comments

Critical appraisal of clinical trials

Slides developed by Amanda Burls for an interactive presentation covering the most important features of well controlled trials.

| 0 Comments

Explaining the unbiased creation of treatment comparison groups and blinded outcome assessment

A class were given coloured sweets and asked to design an experiment to find out whether red sweets helped children to think more quickly.

| 0 Comments

Applying Systematic Reviews

How useful are the results of trials in a systematic review when it comes to weighing up treatment choices for particular patients?

| 0 Comments

Systematic Reviews and Meta-analysis: Information Overload

None of us can keep up with the sheer volume of material published in medical journals each week.

| 0 Comments

Combining the Results from Clinical Trials

Chris Cates notes that emphasizing the results of patients in particular sub-groups in a trial can be misleading.

| 0 Comments

Compliance with protocol and follow-up in clinical trials

Denis Black’s 10-min, downloadable, PowerPoint presentation on compliance, follow up, and intention-to-treat analysis in clinical trials.

| 0 Comments

Clinical Significance – CASP

To understand results of a trial it is important to understand the question it was asking.

| 0 Comments

Statistical Significance – CASP

In a well-conducted randomized trial, the groups being compared should differ from each other only by chance and by the treatment received.

| 0 Comments

P Values – CASP

Statistical significance is usually assessed by appeal to a p-value, a probability, which can take any value between 0 (impossible) and 1 (certain).

| 0 Comments

Making sense of results – CASP

This module introduces the key concepts required to make sense of statistical information presented in research papers.

| 0 Comments

Screening – CASP

This module on screening has been designed to help people evaluate screening programmes.

| 0 Comments

Randomised Control Trials – CASP

This module looks at the critical appraisal of randomised trials.

| 0 Comments

Common Sources of Bias

Bias (the conscious or unconscious influencing of a study and its results) can occur in different ways and renders studies less dependable.

| 0 Comments

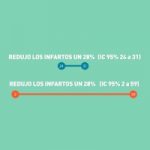

Confidence intervals in research (Los intervalos de confianza en investigación)

What are confidence intervals for in research studies?

| 0 Comments

Watson in search of the evidence (Watson en busca de la evidencia)

A comic about conflicts of interest and searching for information.

| 0 Comments

The need to compare like-with-like in treatment comparisons

Allocation bias results when trials fail to ensure that, apart from the treatments being compared, ‘like will be compared with like’.

| 0 Comments

Avoiding biased selection from the available evidence

Systematic reviews are used to identify, evaluate and summarize all the evidence relevant to addressing a particular question.

| 0 Comments

Preparing and maintaining systematic reviews of all the relevant evidence

Unbiased, up-to-date systematic reviews of all the relevant, reliable evidence are needed to inform practice and policy.

| 0 Comments

Dealing with biased reporting of the available evidence

Biased reporting of research occurs when the direction or statistical significance of results influences how research is reported.

| 0 Comments

Why treatment comparisons must be fair

Fair treatment comparisons avoid biases and reduce the effects of the play of chance.

| 0 Comments

Bias introduced after looking at study results

Biases can be introduced when knowledge of the results of studies influences analysis and reporting decisions.

| 0 Comments

Reducing biases in judging unanticipated effects of treatments

As with anticipated effects of treatments, biases and the play of chance must be reduced in assessing suspected unanticipated effects.

| 0 Comments

Video games and health improvement: a literature review of randomized controlled trials

This is a critical appraisal of a non-systematic review of randomized trials of video games for improving health.

| 0 Comments

Why comparisons must address genuine uncertainties

Too much research is done when there are no genuine uncertainties about treatment effects. This is unethical, unscientific, and wasteful.

| 0 Comments

Why treatment comparisons are essential

Formal comparisons are required to assess treatment effects and to take account of the natural course of health problems.

| 0 Comments

Why treatment uncertainties should be addressed

Ignoring uncertainties about the effects of treatments has led to avoidable suffering and deaths.

| 0 Comments

What are systematic reviews?

A 3-min video by Jack Nunn and The Cochrane Consumers and Communication group for people unfamiliar with the concept of systematic reviews.

| 0 Comments

Understanding Overdiagnosis bias

Gilbert Welch’s 14-min video discussing the risks of overdiagnosis bias and screening.

| 0 Comments

Calculating and interpreting absolute and relative change in an unwanted outcome after treatment

Gilbert Welch’s 6-min video explaining how to calculate and interpret absolute and relative change in an unwanted outcome.

| 0 Comments

Testing Treatments Audio Book

The Testing Treatments Audiobook enables visitors to the TTi site to select whichever chapters in the book they would like to listen to.

| 0 Comments

Double blind studies

A webpage discussing the importance of blinding trial participants and researchers to intervention allocation.

| 0 Comments

CEBM – Study Designs

A short article explaining the relative strengths and weaknesses of different types of study design for assessing treatment effects.

| 0 Comments

John Ioannidis, the scourge of sloppy science

An 8-min podcast interview with John Ioannidis explaining how research claims can be misleading.

| 0 Comments

Science Weekly Podcast – Ben Goldacre

A 1-hour audio interview with Ben Goldacre discussing misleading claims about research.

| 0 Comments

Type I and Type II errors, and how statistical tests can be misleading

Gilbert Welch’s 12-min video explaining Type I and Type II errors, and how statistical tests can be misleading.

| 0 Comments

How do you know which healthcare research you can trust?

A detailed guide to study design, with learning objectives, explaining some sources of bias in health studies.

| 0 Comments

CASP: making sense of evidence

The Critical Appraisal Skills Programme (CASP) website with resources for teaching critical appraisal.

| 0 Comments

In defence of systematic reviews of small trials

An article discussing the strengths and weaknesses of systematic reviews of small trials.

| 0 Comments

Mega-trials

In this 5-min audio resource, Neeraj Bhala discusses systematic reviews and the impact of mega-trials.

| 0 Comments

Relative or absolute measures of effects

Dr Chris Cates' article explaining absolute and relative measures of treatment effects.

| 0 Comments

Evidence from Randomised Trials and Systematic Reviews

Dr Chris Cates' article discussing control of bias in randomised trials and explaining systematic reviews.

| 0 Comments

The perils and pitfalls of subgroup analysis

Dr Chris Cates' article demonstrating why subgroup analysis can be untrustworthy.

| 0 Comments

Reporting results of studies

Dr Chris Cates' article discussing how to report study results, with emphasis on P-values and confidence intervals.

| 0 Comments

Reducing the play of chance using meta-analysis

Combining data from similar studies (meta-analysis) can help to provide statistically more reliable estimates of treatment effects.

| 0 Comments
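To make the idea in the entry above concrete, here is a minimal sketch of one common way to combine study results: fixed-effect, inverse-variance weighting. This is an assumption chosen for illustration and not necessarily the method the linked resource describes. The function name and the input numbers are hypothetical.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Pool effect estimates (e.g. log risk ratios) with inverse-variance weights."""
    # More precise studies (smaller standard error) get larger weights.
    weights = [1 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return pooled, pooled_se


# Two hypothetical small trials reporting log risk ratios.
pooled, pooled_se = pool_fixed_effect([-0.2, -0.4], [0.2, 0.2])
print(f"pooled estimate = {pooled:.2f}, standard error = {pooled_se:.3f}")
```

The pooled standard error is smaller than that of either trial alone, which is the sense in which combining similar studies reduces the play of chance.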

AllTrials: All Trials Registered | All Results Reported

AllTrials aims to correct the situation in which studies remain unpublished or are published but with selective reporting of outcomes.

| 0 Comments

Routine use of unvalidated therapy is less defensible than careful research to assess the effects of those treatments

It is more difficult to obtain consent to give a treatment in a clinical trial than to give the same treatment for patients in practice.

| 2 Comments

Evidence Based Medicine Matters: Examples of where EBM has benefitted patients

Booklet containing 15 examples submitted by Royal Colleges where Evidence-Based Medicine has benefited clinical practice.

| 0 Comments

Big data and finding the evidence

“Big data” refers to large-scale data-processing technologies intended to generate insights into performance, behaviour and trends.

| 0 Comments

Some Studies That I Like to Quote

This short music video encourages health professionals to use evidence to help reach treatment decisions in partnership with patients.

| 0 Comments

What does the Cochrane logo tell us?

This video and animated slide presentation prepared by Steven Woloshin shows how the Cochrane logo was developed, and what it tells us.

| 3 Comments

On taking a good look at ourselves

Iain Chalmers talks about failings in scientific research that lead to avoidable harm to patients and waste of resources.

| 1 Comment

Communicating with patients on evidence

This discussion paper from the US Institute of Medicine provides guidance on communicating evidence to patients.

| 0 Comments

Surgery for the treatment of psychiatric illness: the need to test untested theories

Simon Wessely describes the untested theory of autointoxication, which arose in the 1890s and caused substantial harm to patients.

| 0 Comments

What did James Lind do in 1747?

A 2-minute video clip from a BBC documentary recreating James Lind's celebrated experiment to test treatments for scurvy.

| 2 Comments

Connecting researchers with people who want to contribute to research

People in Research connects researchers who want to involve members of the public with members of the public who want to get involved.

| 0 Comments

New – but is it better?

Key points: Testing new treatments is necessary because new treatments are as likely to be worse as they are to be […]

| 0 Comments